Technical Search Engine Optimization

Technical SEO refers to the basic way in which your website or webpage is prepared for Google and the other search engines. Technical SEO is a necessary evil: evil because it takes time, and necessary because if you do not do it you will have trouble getting Google to take you seriously.

There has probably been more misinformation about SEO than any other topic related to web development and marketing. It is a lucrative business and as is the case with many similar situations where the client does not have a good knowledge base, many have been taken for a ride.

Traditionally SEO is broken into two distinct elements:

- Onpage SEO that refers to the code that is behind your page

- Offpage SEO that refers to the links that point at your web page.

Offpage SEO

Offpage SEO used to be all about getting links, or link building, for web pages. In the early days there were complete suites of software built around identifying webmasters that would exchange links with you in so-called reciprocal linking. The Holy Grail was the non-reciprocal link from a page with a high PageRank. Buying links from such pages and other dirty deeds were the way to skip the pain. In 2012 we spoke to a Venture Capital Incubator in Madrid that claimed that by simply putting a link to a webpage for a specific keyword into the over 1,000 websites they had spawned, they could assure that the new website was ranked number 1 or close to it the following morning. You can see why Google began to use other factors, and those days are now gone.

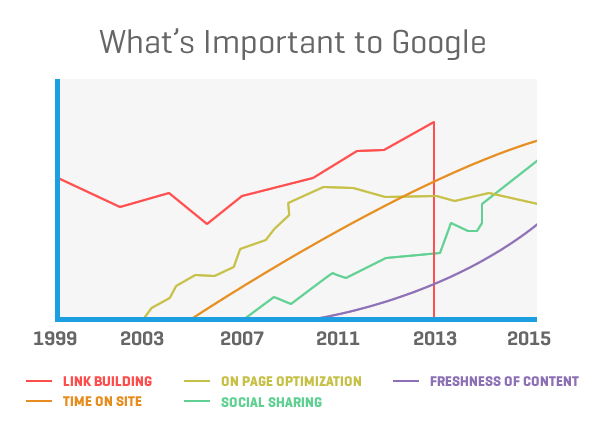

Neil Patel has put together a good graph that shows how Google started perceiving Offpage SEO:

What we can see is the demise of link building and the rise of factors such as time on site, social sharing and the freshness of the content. All of these have to do with the content: making it interesting (=fresh) so someone reads the long-form article (=time on site) and then shares it (=social sharing).

Onpage SEO

This is all about the code. If you don’t have the correct elements in your webpage code then you won’t get highly ranked – it is really that simple. It is a threshold condition. You just need to do it.

It is not magic. There is no secret formula – it is just good coding. Frankly, anyone that builds a website these days should know exactly what it is that they have to put into a webpage so that it can rank highly.

However, there are hundreds of potential bits of code and website ups and downs that can be considered, and sometimes over-engineering a page can be detrimental. seoflow recently published some findings on which of the 200 or so elements of the Google algorithm seem to have a high correlation with good ranking.

This is what we distilled from their findings that also matches our own experience:

- keyword in Title tag

- keyword at start of Title

- keyword in URL

- https – i.e. the use of an SSL certificate (make sure all the images are served over https as well!)

- .com instead of any other top-level domain (TLD). The exception might be a national domain such as .nl or .es when marketing to that geography

- URL length of < 18 characters (after the domain)

- < 2000 KB per page (including images)

- Google PageSpeed of > 63

- Google Usability > 92

Get these elements right, along with captivating content of course, and you stand a good chance of getting ranked on the first page.

Again, these elements just make sense for a good web experience: easy to identify what a page is about (=the titles and the URL content and length), safe to use (=https), quick to load (=the total weight and the PageSpeed score) and easy to read (=the Usability score). We just need to put ourselves in Google’s shoes: which page of the 1000s about a given keyword is going to give the best experience to the person searching?
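The checklist above is mechanical enough to automate. The sketch below is only an illustration: the function name `check_onpage`, the example URL and the idea of treating hyphens in the URL as spaces are our own assumptions, and the PageSpeed and Usability scores are left out because they have to come from Google's own tools.

```python
from urllib.parse import urlparse


def check_onpage(url: str, title: str, keyword: str, page_bytes: int) -> dict:
    """Return a pass/fail flag for each threshold in the checklist."""
    parsed = urlparse(url)
    kw = keyword.lower()
    title_lc = title.lower().strip()
    # Path after the domain, without the leading slash.
    path = parsed.path.lstrip("/")
    # Assumption: hyphens in the URL stand in for spaces in the keyword.
    path_words = path.lower().replace("-", " ")
    return {
        "keyword_in_title": kw in title_lc,
        "keyword_at_start_of_title": title_lc.startswith(kw),
        "keyword_in_url": kw in path_words,
        "https": parsed.scheme == "https",
        "url_length_under_18": len(path) < 18,
        "page_under_2000kb": page_bytes < 2000 * 1024,
    }


# Hypothetical page used only to show the call.
report = check_onpage(
    url="https://example.com/technical-seo",
    title="Technical SEO: a practical checklist",
    keyword="technical seo",
    page_bytes=850_000,
)
for name, passed in report.items():
    print(f"{name}: {'ok' if passed else 'FAIL'}")
```

A check like this only tells you that the threshold conditions are met. It says nothing about whether the content is worth reading.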

OnPage SEO means correctly programming the individual pages of your website so that search engines get a clear picture of each page and can easily interpret it and rank it accordingly. I like to assume Google is, er… dumb. Sorry, Google. In fact Google is probably one of the best examples of AI around, but it is still relatively crude.

First of all, Google can only interpret what you give it to interpret. If you leave an important element out of the code then it simply cannot give you a positive point for that element and, even worse, if your competitor does place that element in their page then they WILL rank higher than you. So with OnPage SEO, the devil is in the detail.

Once again if we write good articles for real people to read, then we are well on the way to providing Google with the required text it needs to analyse. Given that we are going to be writing at least some long-form articles that people are interested in, then the article is going to be keyword rich not only for the short phrases but also for long-tail keyword phrases, synonyms, metaphors, related terms and plenty of semantic material for Google to get its teeth into.

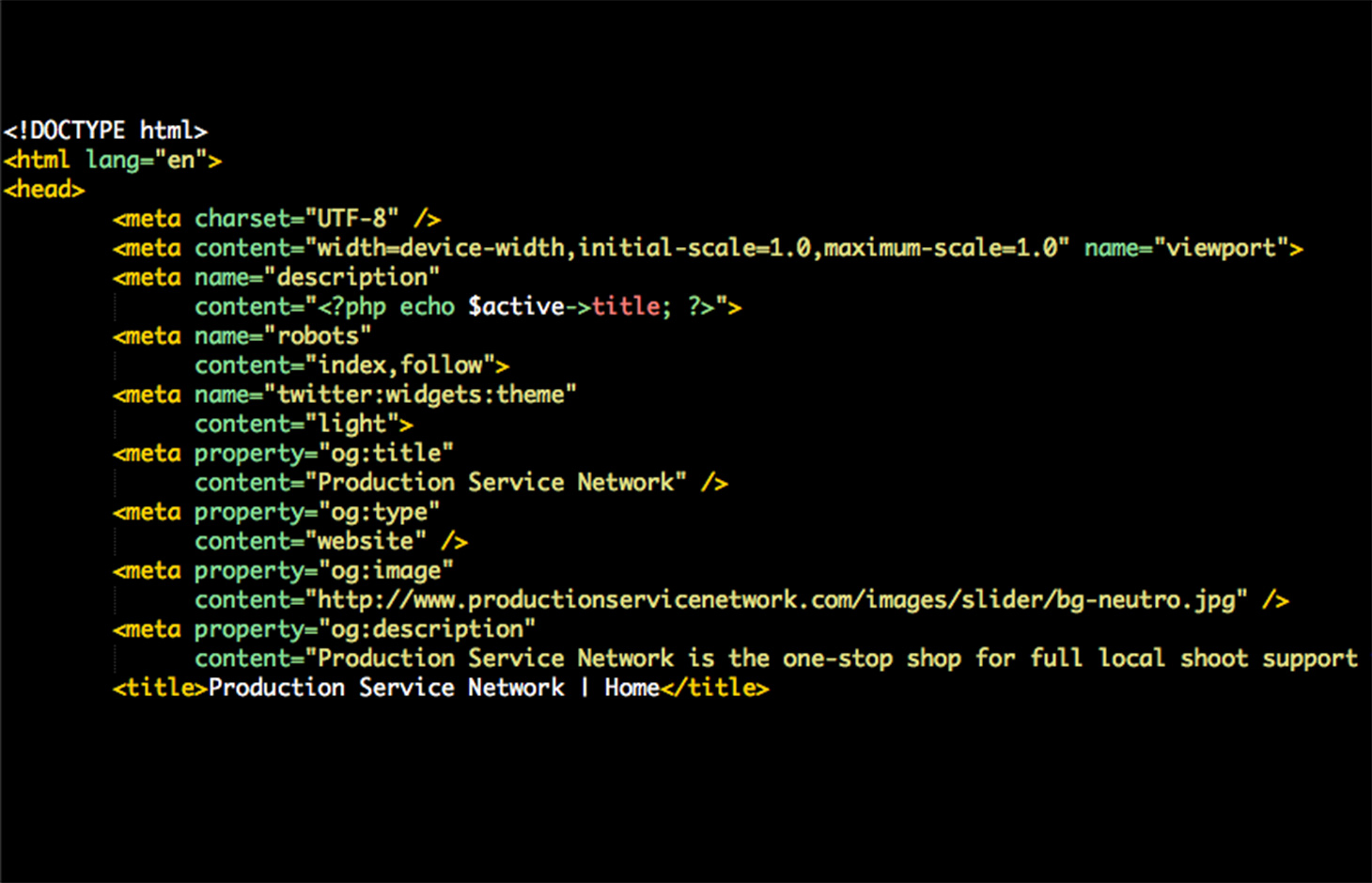

Nonetheless, there are literally hundreds of other elements that you have to look at and get right. These are the usual suspects like titles, the canonical URLs, the meta tags, the URL structure, the broken links etc. etc. On average it takes between 3 – 7 hours to ensure that a page is correctly optimised. If you are using a CMS, pages with a similar structure or an inserted head file then this time can be reduced, but building correctly in the first place is clearly the right approach.

It is also easy to forget that page speed is normally determined by the page contents, and that placing the JavaScript and CSS files, as well as other imports, correctly on the page is extremely important. Using Google’s PageSpeed Insights can help tremendously, but there can be conflicts between functionality and page speed. One nasty effect, for example, is the so-called page flash, where the page is first shown unstyled because the CSS loads later. It requires skill to ensure that everything is loaded optimally.
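As a rough illustration of what "placed correctly" can mean, the sketch below scans a page for two common suspects: external scripts in the head that have neither `async` nor `defer` (they hold up rendering), and stylesheets linked outside the head (a typical cause of the page flash). The class name `AssetPlacementAudit` and the sample markup are our own inventions, and a real audit would look at far more than this.

```python
from html.parser import HTMLParser


class AssetPlacementAudit(HTMLParser):
    """Flag render-blocking head scripts and stylesheets loaded late."""

    def __init__(self) -> None:
        super().__init__()
        self.in_head = False
        self.blocking_scripts = []   # <script src> in <head> without async/defer
        self.late_stylesheets = []   # <link rel="stylesheet"> outside <head>

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)  # boolean attributes such as async map to None
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head and "src" in attr:
            if "async" not in attr and "defer" not in attr:
                self.blocking_scripts.append(attr["src"])
        elif tag == "link" and not self.in_head:
            if (attr.get("rel") or "").lower() == "stylesheet":
                self.late_stylesheets.append(attr.get("href") or "")

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


# Hypothetical page used only for the demonstration.
SAMPLE = """
<html><head>
  <link rel="stylesheet" href="/css/site.css">
  <script src="/js/analytics.js" async></script>
  <script src="/js/slider.js"></script>
</head><body>
  <link rel="stylesheet" href="/css/late.css">
  <script src="/js/app.js"></script>
</body></html>
"""

audit = AssetPlacementAudit()
audit.feed(SAMPLE)
print("Blocking scripts in head:", audit.blocking_scripts)
print("Stylesheets outside head:", audit.late_stylesheets)
```

Whether a flagged file should actually be moved or deferred is a judgment call, because some scripts genuinely need to run before the page is drawn. That is exactly the conflict between functionality and speed mentioned above.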

What is OnPage Social Media Optimisation?

Equally important for content marketing is OnPage optimisation for Social Media. Facebook, Twitter and Pinterest have protocols for determining the content to show when someone shares your page.

Facebook’s OpenGraph is becoming a quasi-standard, but clearly the main advantage is for Facebook itself. Twitter is similar but different, taking the information to build a Twitter Card. In general, for both networks each page needs to have certain data declared in the head of the page:

- The canonical URL – the same as Google uses

- The type of data (webpage, video etc)

- The locale or language

- The name and site of the page

- The page description – similar to the meta description

- The image you want to show when the page is shared

- Details about the creator

- Details about video (particularly in the case of Twitter)

Both networks have test URLs where you can emulate what will be shown. Given the importance of Social media to inbound content marketing, it is vital that this element of the OnPage optimisation is not left out.
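To make the list above concrete, here is a minimal sketch that turns a page's data into the corresponding meta tags. The `og:*` and `twitter:*` names are the standard Open Graph and Twitter Card properties. The function name `social_meta_tags`, the dictionary keys and the example values are our own assumptions.

```python
from html import escape

# (attribute, tag name, key in our page dictionary)
TAG_MAP = [
    ("property", "og:url", "canonical_url"),
    ("property", "og:type", "type"),
    ("property", "og:locale", "locale"),
    ("property", "og:site_name", "site_name"),
    ("property", "og:title", "title"),
    ("property", "og:description", "description"),
    ("property", "og:image", "image"),
    ("name", "twitter:card", "twitter_card"),
    ("name", "twitter:creator", "twitter_creator"),
]


def social_meta_tags(page: dict) -> str:
    """Render one <meta> line per value present in the page dictionary."""
    lines = []
    for attr, tag, key in TAG_MAP:
        value = page.get(key)
        if value:
            # Escape quotes and ampersands so the attribute stays valid HTML.
            content = escape(value, quote=True)
            lines.append(f'<meta {attr}="{tag}" content="{content}">')
    return "\n".join(lines)


# Hypothetical page data.
print(social_meta_tags({
    "canonical_url": "https://example.com/technical-seo",
    "type": "article",
    "locale": "en_GB",
    "site_name": "Example Agency",
    "title": "Technical SEO: the necessary evil",
    "description": "What has to be in the code before Google takes you seriously.",
    "image": "https://example.com/img/technical-seo.png",
    "twitter_card": "summary_large_image",
    "twitter_creator": "@example",
}))
```

The output goes into the head of the page and is worth running through each network's test URL before publishing.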

How to make sure Google finds you and indexes your site

Finally, when all the details have been finished, the site is there but we still need to make sure that Google knows about it.

Given that one of the premises of Content Marketing is that other sites will link to your article because of your authority, then Google could be left to follow those links. However, nobody is that patient and it is simply not a good idea to be passive rather than active.

There are two areas that require special attention. The first is making sure that Google can index the page and that we are not blocking it from the search engines. The key file is, of course, the robots.txt file. This tells search bots not to crawl parts of the website we don’t want them to, such as the login page to the administrator of a CMS.

The rules in robots.txt are only advisory and some search engines will index everything regardless. A noindex meta tag or directive is a better way of stopping the search engines from indexing a page, but the only real way is to password protect the directory or page.

One of the uses of the robots.txt file is to stop a page from being indexed until it is fully finished. This is great for developing pages online and being able to view them prior to letting them loose on an unsuspecting public. We have often seen webmasters or editors forget to take out or change the robots file or remove the noindex tag. This can lead to some very delusional marketers!
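A forgotten robots.txt rule is easy to catch with a quick script. The sketch below uses Python's standard `urllib.robotparser` to ask whether a given bot may fetch a URL. The helper name `is_crawlable`, the paths and the example domain are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a CMS-driven site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /administrator/
Disallow: /staging/
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for url in (
    "https://example.com/blog/technical-seo",
    "https://example.com/administrator/index.php",
):
    print(url, "->", "allowed" if is_crawlable(ROBOTS_TXT, url) else "blocked")

# The classic leftover from development: a blanket Disallow.
LEFTOVER = "User-agent: *\nDisallow: /\n"
print("home page allowed:", is_crawlable(LEFTOVER, "https://example.com/"))
```

This only checks the robots.txt side. A leftover noindex meta tag in the page itself has to be looked for separately.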

Sitemap SEO

Finally, there is the sitemap. This is now a good method of letting Google know what it is that you want to show them. Using Google’s newly named Search Console (it will always be Webmaster Tools to us!) this sitemap can be sent to Google. It needs to be updated to reflect new content and removed content. If the website is updated often it is probably a good idea to write a script to keep the sitemap up to date.
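Such a script does not need to be complicated. The sketch below builds a sitemap in the standard sitemaps.org XML format from a list of URLs and last-modified dates. The function name `build_sitemap` and the example pages are assumptions, and in practice the list would come from your CMS or database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages) -> str:
    """Build sitemap XML from (url, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # YYYY-MM-DD
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/technical-seo", "2024-02-01"),
])
print(sitemap_xml)
```

Run on a schedule, or whenever content is published or removed, this keeps the file that Search Console reads in step with the site.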

Creating and implementing a proper sitemap is a critical aspect of search engine optimization that will guide your entire campaign, and set the tone for how Google, and other search engines, will navigate your website.

Start by identifying your website type. There are vast differences between local businesses, e-commerce websites, publishers and multi-location businesses.

Once you know which type of website you are looking to create or optimize, you can begin implementing the proper site structure to improve crawlability for search engine robots and the way link juice flows through your website.

This includes site architecture, link juice flow, website silos, etc.

Consult an SEO specialist or agency if you need additional help developing your website sitemap.

How can a poor or old server affect SEO?

One of the key elements in making your website perform is the server. There are essentially two factors of the server environment that can affect your SEO performance:

Pages at the speed of light

The speed at which your page is loaded is in part at least due to your server and generally due to the configuration of the software as well as the memory and computing power that it has. If a page loads slowly the user gets a poorer experience – especially over mobile. In fact as far as Google is concerned it is the time that the site or pages take to crawl that has a direct relationship to the search engine results. The difference is subtle but you still need to have a fast server.

Sometimes the connectivity of the server to the internet is also an issue and the uptime of the server is a clear issue – if Google can’t get your content it is not going to rank very well! Obviously the uptime for your server has to be perfect.

Perhaps more important is the use of server caching. This can make a huge difference in the time it takes for the page to be shown. More precisely, we should say the time it takes for the cached copy of the page to be shown: if the page has been updated since the cache was taken, the user won’t see the latest version. And if the resource they are looking for is not in the cache, they have to wait for the server to look for it and then serve it from a different location, which can take longer overall. The ability of servers to compress content is also a key element in making pages load faster. This is more often than not a question of editing key configuration files that are not part of the website as such but instruct the server to compress its output.
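To get a feel for what compression buys you, the short sketch below gzips a block of repetitive HTML and compares the sizes. It only illustrates the effect. On a real site the web server does this through its configuration rather than through application code, and the sample markup and the name `compression_report` are made up for the example.

```python
import gzip


def compression_report(body: bytes) -> tuple:
    """Return (original size, gzip-compressed size) in bytes."""
    compressed = gzip.compress(body)
    return len(body), len(compressed)


# Hypothetical page body: HTML is repetitive, so it compresses well.
html = ("<p>Technical SEO is mostly good coding.</p>\n" * 500).encode("utf-8")
original, compressed = compression_report(html)
print(f"{original} bytes uncompressed, {compressed} bytes gzipped")
```

Real pages are less repetitive than this sample, so the saving will be smaller, but text-based files such as HTML, CSS and JavaScript generally shrink substantially.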

The server location will also have an effect on how long data takes to travel to the visitor. It is also a weak signal to Google about the audience you want to attract. If you have a .nl site on a server in the Netherlands, they probably realise that your market is in the Netherlands.

Keep them bugs out

Web pages can be hacked. In fact, Google blacklists about 20,000 websites a week for containing malware and a further 50,000 a week for phishing. If you use Content Management Systems such as WordPress or Joomla you are more likely to get hacked, and your webmaster will need to make sure that the site is kept totally up to date – constantly.

The consequences of a hack can be manifold:

- your website can be used to send out spam, which will probably put your domain on a blacklist and prevent you from sending email

- the data that you gather through the website can be stolen

- the data that you have stored in the server can be stolen – a dreaded data breach which will negatively impact your authority and trust

- there are hacks that will piggyback your rankings sending visitors to other sites

- being hacked will result in Google writing a warning in the search results that the site is hacked or, if the user has Chrome, serving the red page advising them not to visit your site. This will result in a dramatic loss of traffic in the short and medium term.

Solid firewalls and real-time virus scanning are essential.